This post shows how to use the IBM Watson Visual Recognition service to classify images after training it with positive and negative examples. We will train the service and then let it classify some input images.

Requirements

- IBM Bluemix Account

- Java 8

- Watson SDK for Java

- Apache Maven

SDK for Java

We need this Maven dependency in our pom.xml:

```xml
...
<dependency>
    <groupId>com.ibm.watson.developer_cloud</groupId>
    <artifactId>java-sdk</artifactId>
    <version>4.0.0</version>
</dependency>
...
```

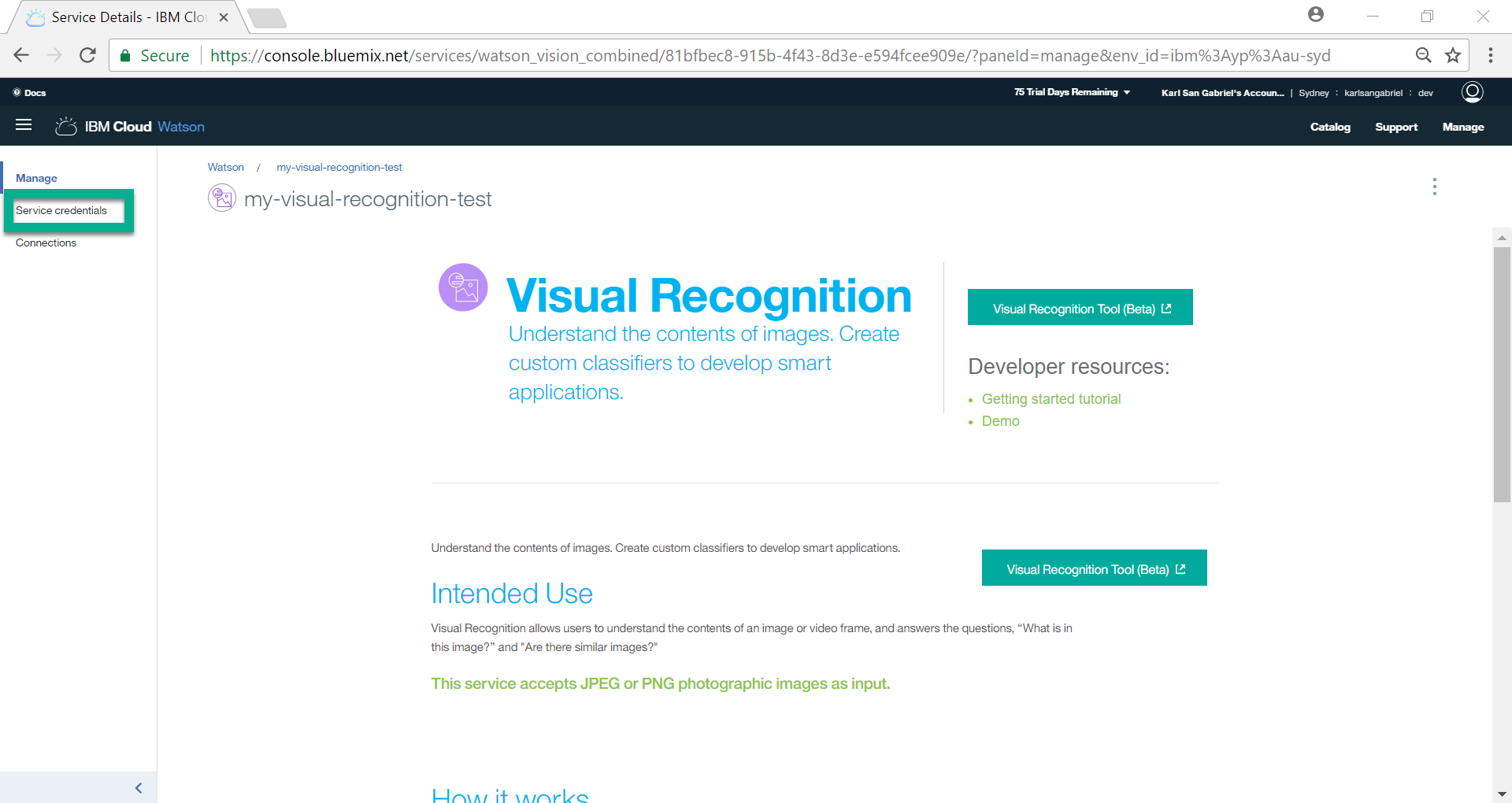

Provision a Visual Recognition Service

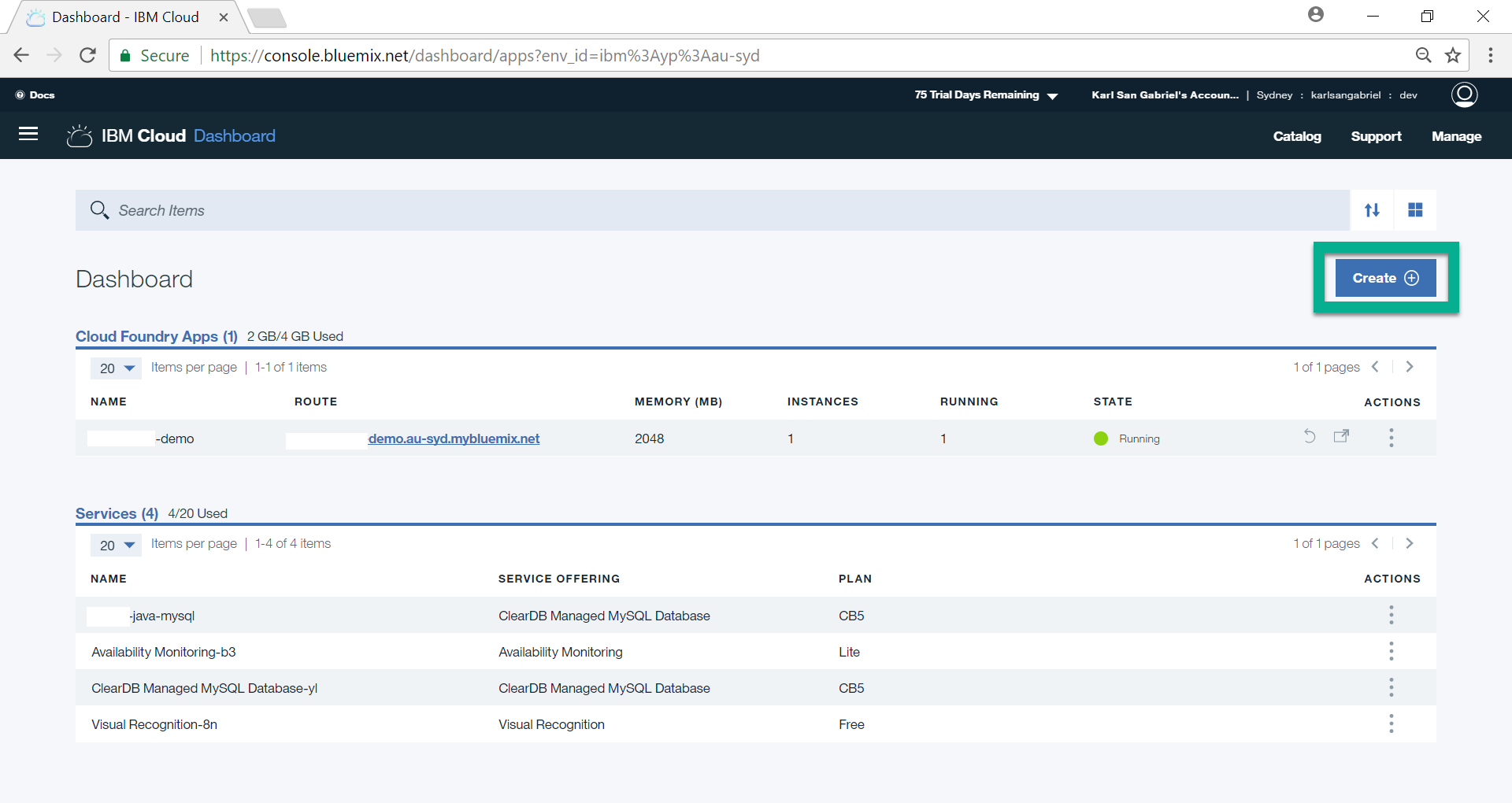

From our dashboard, click Create.

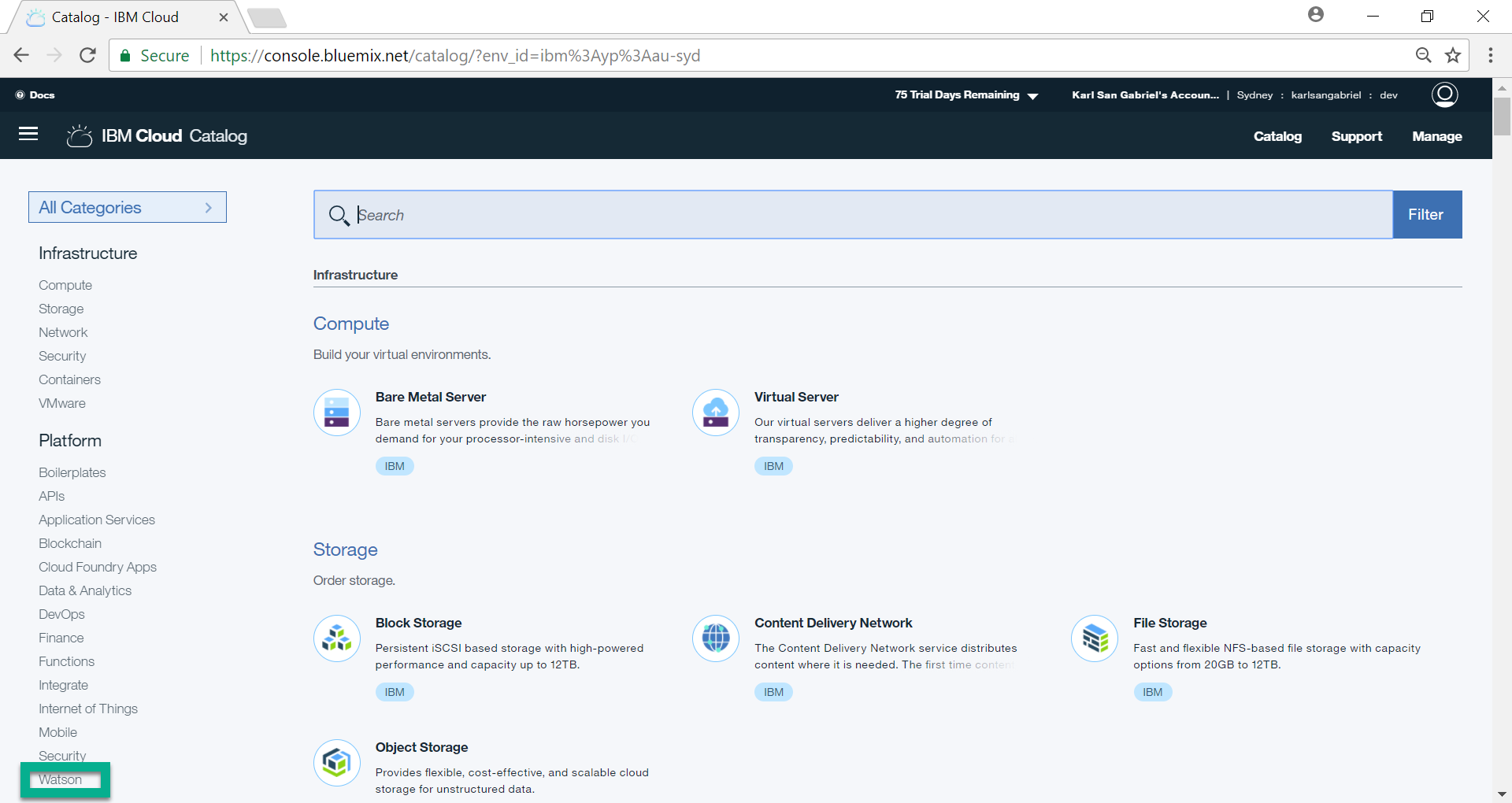

Click the Watson menu option.

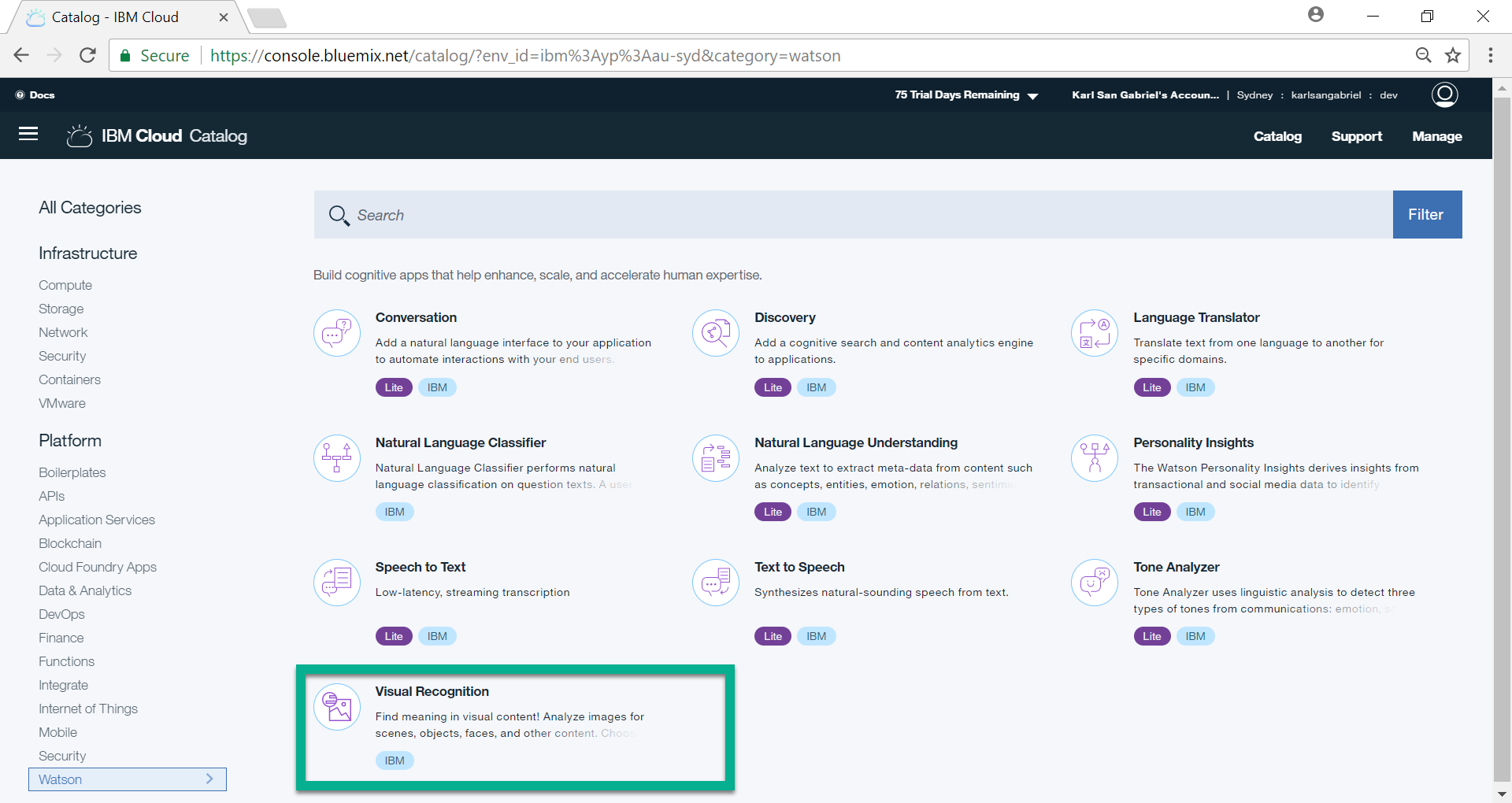

Then, choose Visual Recognition.

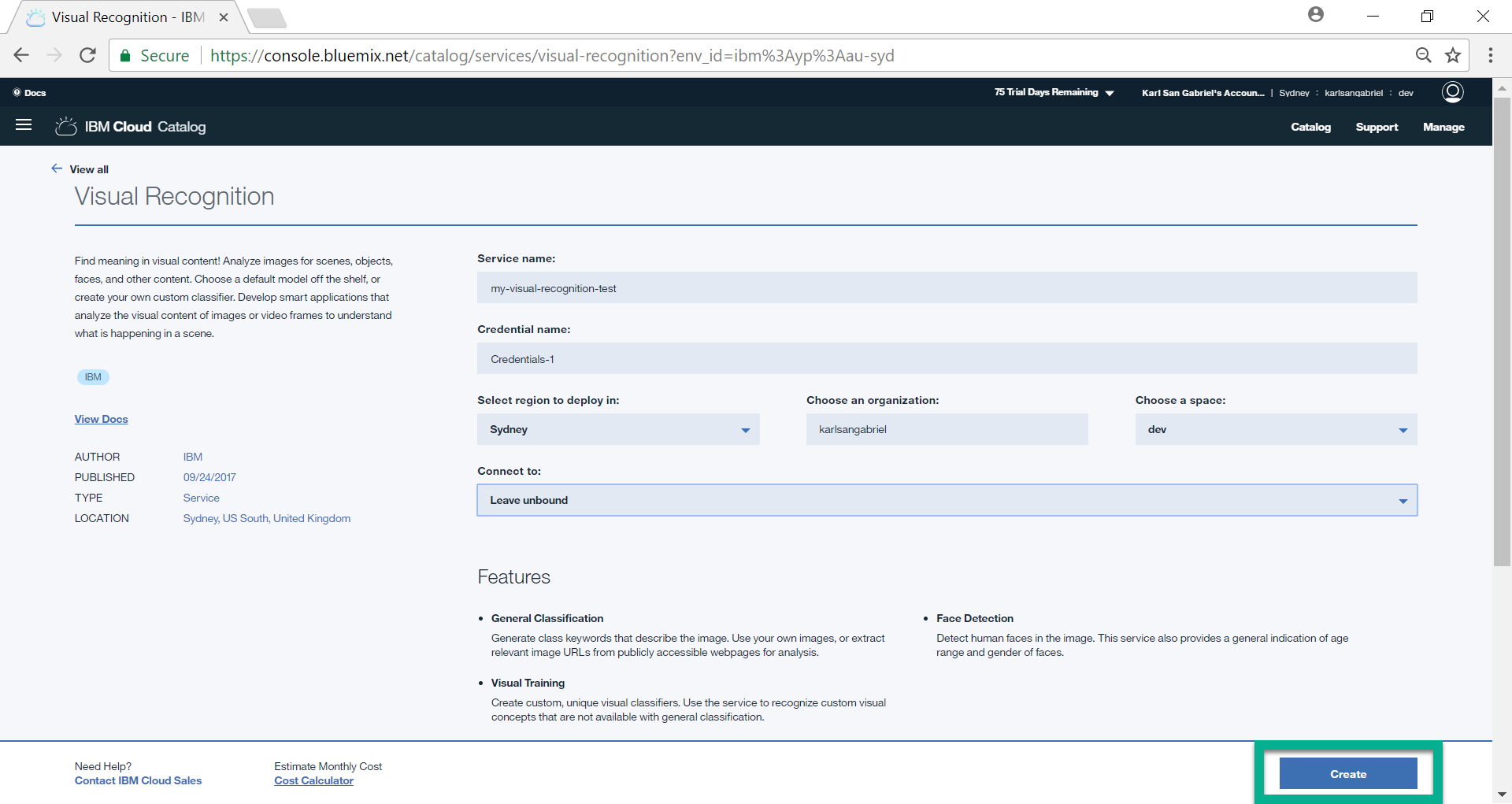

Provide a Service Name. For our purpose, we may leave the rest of the fields as they are. Then, click Create.

At this point, we have successfully provisioned the service. Please note that free accounts may provision only one instance of this service. Next, proceed to Service credentials.

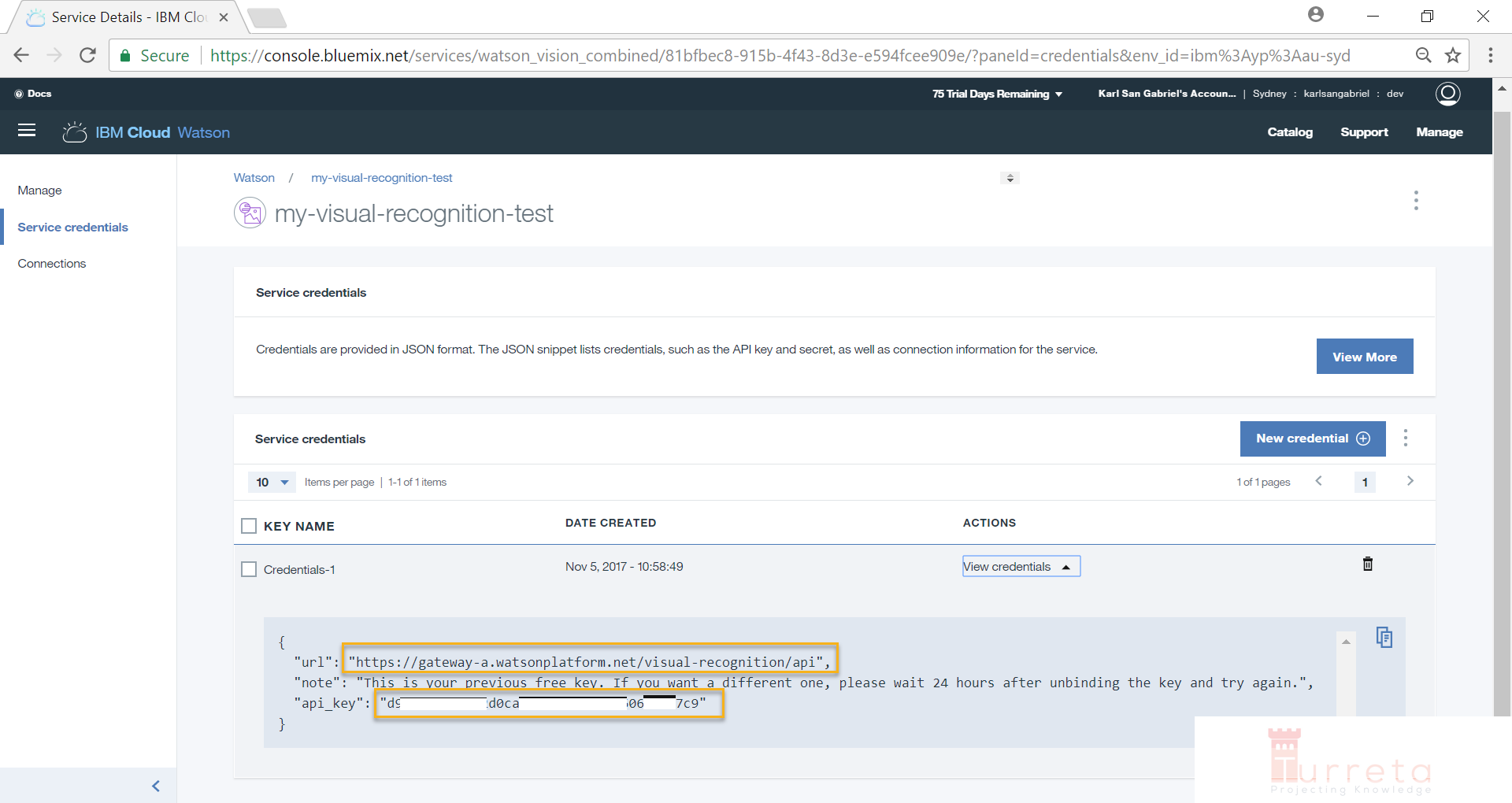

Take note of the url and api_key. We will use them in our Java code.
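Since the url and api_key are secrets tied to our account, it can help to keep them out of source control. The following is a minimal sketch, not part of the original project, that reads them from environment variables. The class name `WatsonCredentials` and the variable names `WATSON_VR_URL` and `WATSON_VR_API_KEY` are assumptions made for illustration only.

```java
import java.util.Map;

// Hypothetical helper: holds the url and api_key copied from the
// Service credentials page, read from environment variables.
final class WatsonCredentials {

    // Assumed variable names; use whatever suits your deployment.
    static final String URL_VAR = "WATSON_VR_URL";
    static final String API_KEY_VAR = "WATSON_VR_API_KEY";

    final String url;
    final String apiKey;

    WatsonCredentials(String url, String apiKey) {
        this.url = url;
        this.apiKey = apiKey;
    }

    // Takes the environment as a Map so the lookup can be exercised
    // without touching the real process environment.
    static WatsonCredentials fromEnvironment(Map<String, String> env) {
        return new WatsonCredentials(require(env, URL_VAR), require(env, API_KEY_VAR));
    }

    // Returns the trimmed value or fails with a message naming the variable.
    private static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing environment variable: " + name);
        }
        return value.trim();
    }

    public static void main(String[] args) {
        try {
            WatsonCredentials credentials = fromEnvironment(System.getenv());
            System.out.println("Using endpoint " + credentials.url);
        } catch (IllegalStateException e) {
            System.err.println(e.getMessage());
        }
    }
}
```

With something like this in place, the API key string passed to the SDK could come from `credentials.apiKey` rather than a literal in the code.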

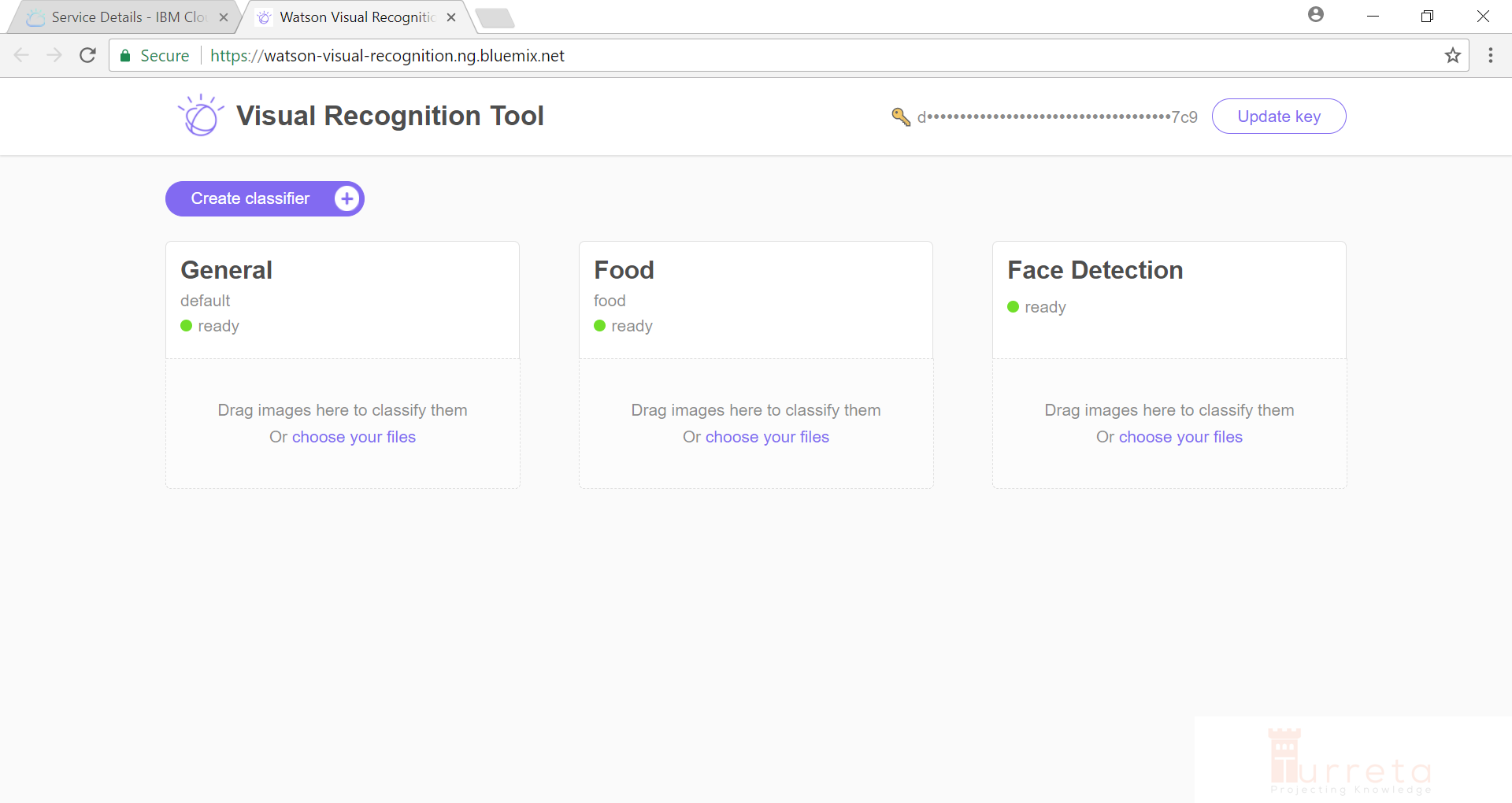

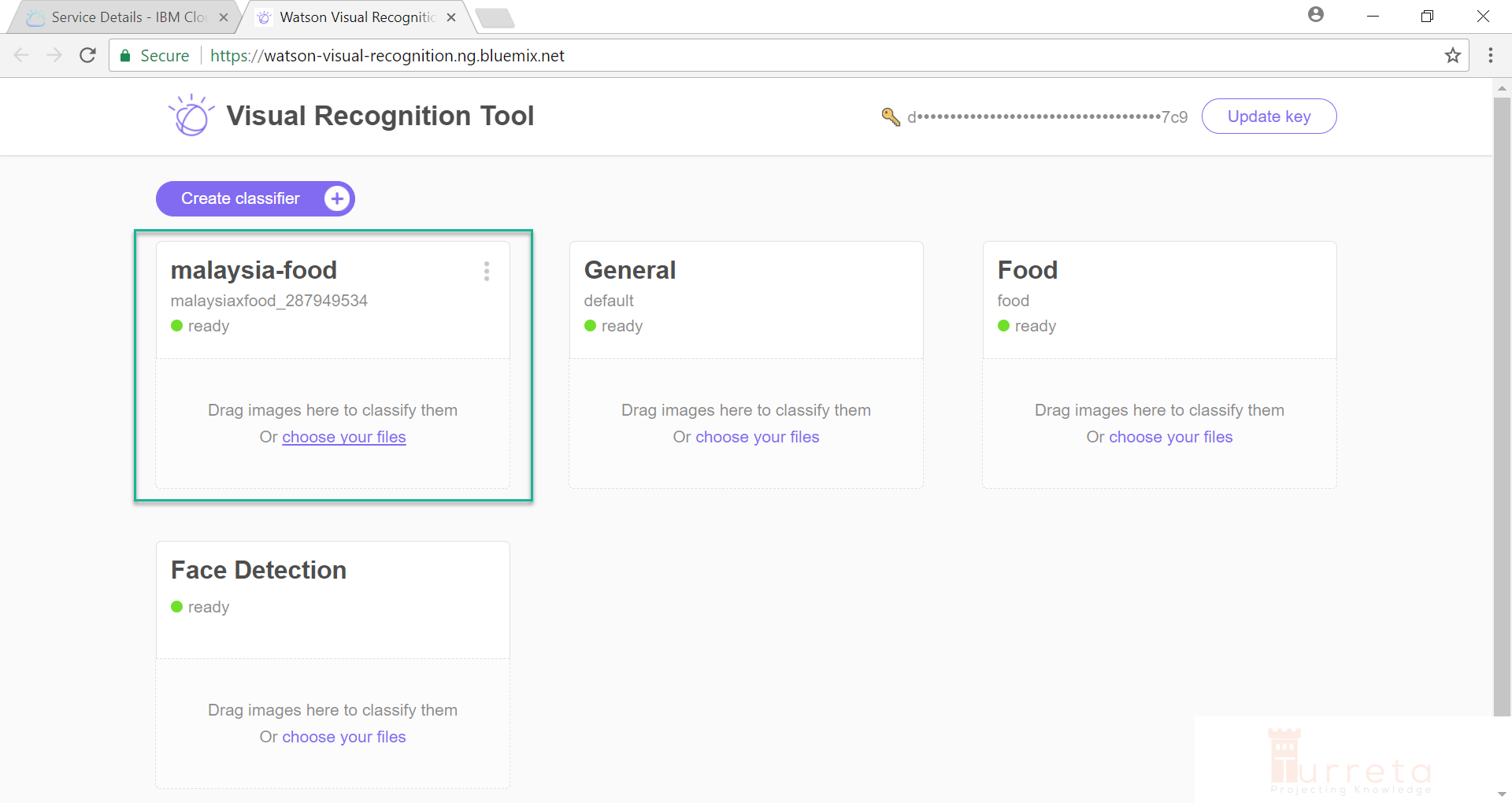

Visual Recognition Tool

We may train our service using the Visual Recognition Tool (https://watson-visual-recognition.ng.bluemix.net/). It lets us create classifiers and upload the associated image files. In our case, however, we will create classifiers and upload images from Java code.

Java Code

Our Service

We have a class annotated with @Service that wraps the VisualRecognition class from the SDK and simply delegates method calls to the appropriate methods.

```java
...
@Service
public class WatsonVisualRecognitionService {

    private VisualRecognition visualRecognition;

    // Create the SDK client once, after Spring has constructed this bean
    @PostConstruct
    public void initService() {
        if (visualRecognition == null) {
            // Default endpoint is https://gateway-a.watsonplatform.net/visual-recognition/api
            // The second argument is the api_key from the Service credentials page
            visualRecognition = new VisualRecognition(
                    VisualRecognition.VERSION_DATE_2016_05_20,
                    "d948f9300-deleted-already-f60693a47c9");
        }
    }

    // Creates a new classifier from the given positive and negative examples
    public void trainService(CreateClassifierOptions createClassifierOptions) throws Exception {
        visualRecognition.createClassifier(createClassifierOptions).execute();
    }

    // Classifies the image(s) described by the given options
    public ClassifiedImages classify(ClassifyOptions classifyOptions) throws Exception {
        return visualRecognition.classify(classifyOptions).execute();
    }
}
```

Training the Service

We train the service by uploading images of “nasilemak” so that it learns what the food looks like. We also upload images that are not of “nasilemak” so that the service learns what the food does not look like.

```java
...
public static void main(String[] args) throws Exception {
    ApplicationContext context =
            SpringApplication.run(TurretaWatsonVisualRecognitionApplication.class, args);
    WatsonVisualRecognitionService service =
            context.getBean(WatsonVisualRecognitionService.class);

    // Upload zip files from resources directory
    CreateClassifierOptions createClassifierOptions = new CreateClassifierOptions.Builder()
            .name("malaysia-food")
            .addClass("nasilemak",
                    Paths.get(ClassLoader.getSystemResource("nasi-lemak_postive_examples.zip").toURI())
                            .toFile())
            .negativeExamples(
                    Paths.get(ClassLoader.getSystemResource("negative_examples.zip").toURI())
                            .toFile())
            .build();

    service.trainService(createClassifierOptions);
}
...
```

Please note the file name format of the zip files. The names mean something:

- _positive_examples contains images of what “nasilemak” is visually.

- negative_examples contains images of what “nasilemak” is not visually.
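The two zip files can be put together by hand, but a small helper makes it repeatable. Below is a rough sketch, using only the JDK, that packs the images in a folder into a zip named with the `_positive_examples` suffix described above. The class `ExampleZipper`, its method names, and the list of accepted file extensions are hypothetical and not taken from the original project.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.zip.ZipEntry;
import java.util.zip.ZipOutputStream;

// Hypothetical helper for preparing training archives.
final class ExampleZipper {

    // Builds a zip name that follows the "_positive_examples" suffix convention.
    static String positiveZipName(String prefix) {
        return prefix + "_positive_examples.zip";
    }

    // Packs every .jpg/.jpeg/.png file directly inside imageDir into zipFile.
    static Path zipImages(Path imageDir, Path zipFile) throws IOException {
        List<Path> images;
        try (Stream<Path> files = Files.list(imageDir)) {
            images = files
                    .filter(Files::isRegularFile)
                    .filter(p -> isImage(p.getFileName().toString()))
                    .sorted()
                    .collect(Collectors.toList());
        }
        if (images.isEmpty()) {
            throw new IllegalArgumentException("No images found in " + imageDir);
        }
        try (OutputStream out = Files.newOutputStream(zipFile);
             ZipOutputStream zip = new ZipOutputStream(out)) {
            for (Path image : images) {
                // Store each image at the root of the archive under its file name
                zip.putNextEntry(new ZipEntry(image.getFileName().toString()));
                Files.copy(image, zip);
                zip.closeEntry();
            }
        }
        return zipFile;
    }

    // Assumed set of image extensions, chosen for this example only.
    static boolean isImage(String fileName) {
        String lower = fileName.toLowerCase();
        return lower.endsWith(".jpg") || lower.endsWith(".jpeg") || lower.endsWith(".png");
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("Usage: ExampleZipper <imageDir> <prefix>");
            return;
        }
        Path created = zipImages(Paths.get(args[0]), Paths.get(positiveZipName(args[1])));
        System.out.println("Wrote " + created.toAbsolutePath());
    }
}
```

The same `zipImages` method could be pointed at a folder of unrelated food photos to produce negative_examples.zip.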

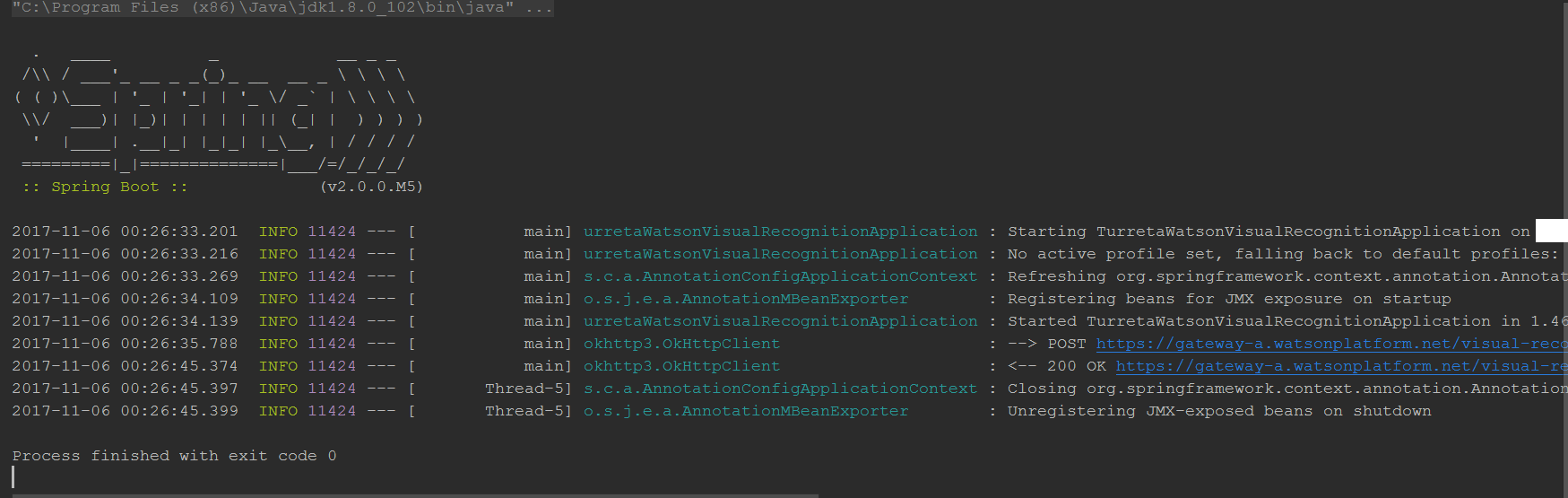

If we run the code above, we get this output in the console.

If we go to the Visual Recognition Tool, we see our new classifier.

Testing the Service

For testing, we use only our own classifiers, e.g., malaysia-food with the classifier ID malaysiaxfood_287949534.

```java
...
public static void main(String[] args) throws Exception {
    ApplicationContext context =
            SpringApplication.run(TurretaWatsonVisualRecognitionApplication.class, args);
    WatsonVisualRecognitionService service =
            context.getBean(WatsonVisualRecognitionService.class);

    ClassifyOptions classifyOptions = new ClassifyOptions.Builder()
            .imagesFile(
                    Paths.get(ClassLoader.getSystemResource("nasi-lemak-typical-indonesian-food-bali-27670807.jpg").toURI())
                            .toFile())
            .parameters("{\"classifier_ids\":[ \"malaysiaxfood_287949534\"]}")
            .build();

    ClassifiedImages classifiedImages = service.classify(classifyOptions);
    System.out.println(classifiedImages);
}
```

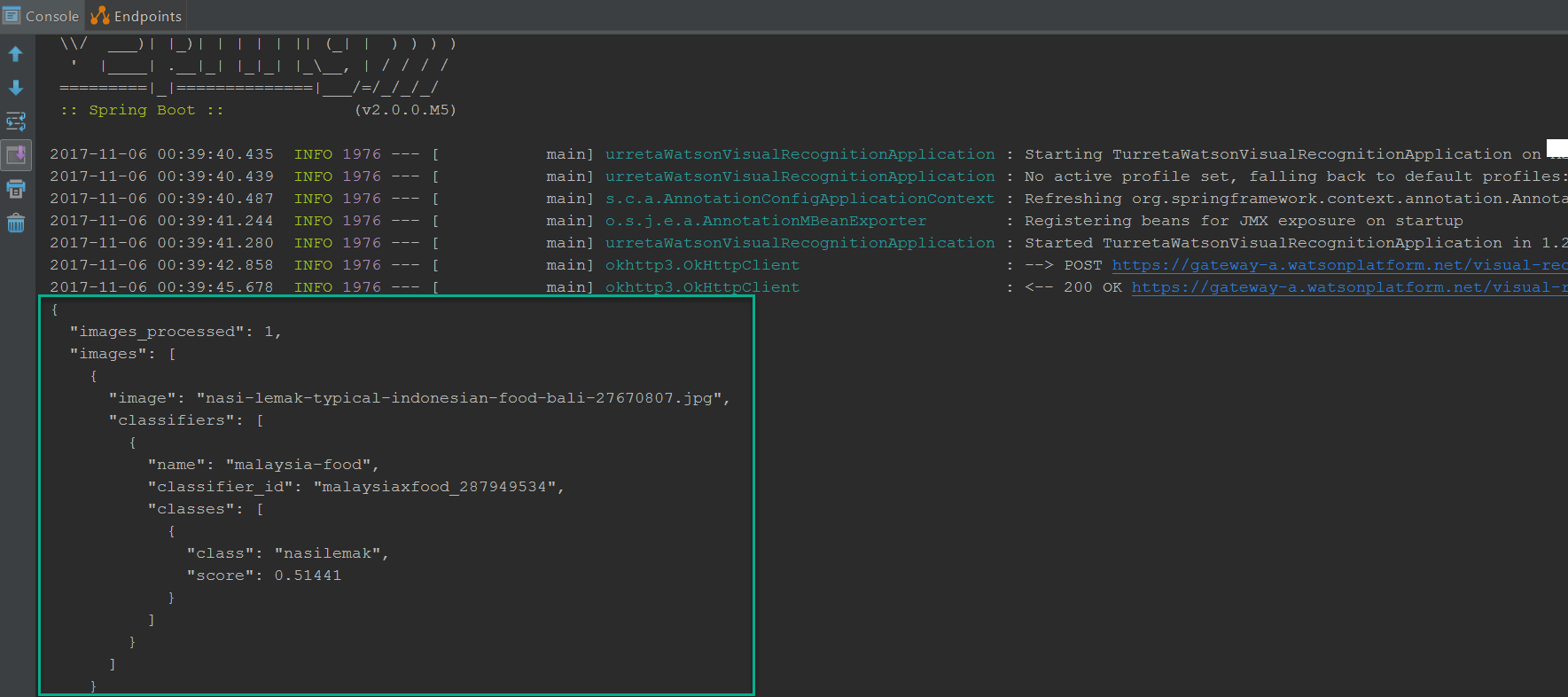

The input image file to classify using our service is “nasi-lemak-typical-indonesian-food-bali-27670807.jpg”. Notice that we pass our classifier ID as a JSON string to the parameters method.
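Hand-escaping the JSON string is easy to get wrong once there is more than one classifier ID. As an illustration only, here is a small JDK-only sketch that builds the same `classifier_ids` string from a list. The class `ClassifyParameters` and its methods are hypothetical; a JSON library would do the same job.

```java
import java.util.Collections;
import java.util.List;

// Hypothetical helper that builds the JSON passed to parameters(...).
final class ClassifyParameters {

    // Produces {"classifier_ids":["id1","id2",...]} for the given IDs.
    static String classifierIds(List<String> ids) {
        StringBuilder json = new StringBuilder("{\"classifier_ids\":[");
        for (int i = 0; i < ids.size(); i++) {
            if (i > 0) {
                json.append(',');
            }
            json.append('"').append(escape(ids.get(i))).append('"');
        }
        return json.append("]}").toString();
    }

    // Escapes quotes, backslashes, and control characters for a JSON string.
    static String escape(String value) {
        StringBuilder escaped = new StringBuilder();
        for (char c : value.toCharArray()) {
            if (c == '"' || c == '\\') {
                escaped.append('\\').append(c);
            } else if (c < 0x20) {
                escaped.append(String.format("\\u%04x", (int) c));
            } else {
                escaped.append(c);
            }
        }
        return escaped.toString();
    }

    public static void main(String[] args) {
        // prints {"classifier_ids":["malaysiaxfood_287949534"]}
        System.out.println(classifierIds(Collections.singletonList("malaysiaxfood_287949534")));
    }
}
```

The resulting string could then be handed to the parameters method in place of the literal used above.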

This code generates the following output on the console.

Download the code

https://github.com/Turreta/Using-IBM-Watson-Visual-Recognition-to-classify-Images
